Here Are Two Tips for Picking Out Misleading Stats in the News

I used them, and they led to this newsletter’s first official correction of a scientific journal article

Welcome to Range Widely. If you just subscribed, check out the intro post to see what this newsletter is all about.


In the last post, I wrote about how a headline-grabbing new study — which said that listening to AC/DC at high volume improved surgeons’ performance — was misleading. (And not just because the study involved neither surgeons nor surgery.) If you want the details, you can see the last post here.

I also thought I spotted an outright mistake in the study, but I didn’t write about it; I wanted to contact a member of the research team first. So I did that, and got a response. The result, readers, is that Range Widely notched its first official correction to a peer-reviewed journal article.

Much more importantly, I think there are two lessons here for smoking out mistaken conclusions in science and science news. Lemme explain:

Here is a stat that ran in many of the articles that covered the study. (This specific line is from the New York Post.)

“In trials, those listening to Highway To Hell and T.N.T. saw the time needed to make a precision cut drop from 236 seconds to 139.”

That stat was so ubiquitous in news coverage that I assume a press release was issued featuring it, and publications just reprinted it. That happens a lot.

One of my best tips for assaying science news is:

When you see a stat, slow down and consider for a moment whether the numbers make sense.

(You can practice Fermi estimation, which I’ve written about before, to develop better number sense.)

In this case, my eyebrow raised. That stat is saying that flipping on AC/DC decreased the time study subjects needed to make a cut from 4 minutes to less than 2.5 minutes. That didn’t seem likely to me. The task must be fairly simple if it can be accomplished in a few minutes, and I just found it unlikely that a tiny intervention like flipping on music would make such a dramatic difference in a straightforward task.
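If you want to make that gut check concrete, here is a minimal Python sketch of the arithmetic. The two numbers come from the New York Post line quoted above; the function names are my own, purely for illustration.

```python
def as_min_sec(seconds: float) -> str:
    """Format a duration in seconds as M:SS."""
    minutes, secs = divmod(round(seconds), 60)
    return f"{minutes}:{secs:02d}"


def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100


# Figures as reported in the news coverage, in seconds.
reported_before = 236
reported_after = 139

print(as_min_sec(reported_before), "->", as_min_sec(reported_after))
# 3:56 -> 2:19
print(f"{percent_reduction(reported_before, reported_after):.0f}% less time")
# 41% less time
```

Cutting the time for a simple task by roughly two-fifths just by turning on music is the kind of result that should make you pause.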

The magnitude of improvement felt to me like someone going from a 9-minute mile to a 5:15. That might be possible with very extensive training, but it certainly isn’t going to happen with some trifling intervention. This brings me to my second tip:

Tiny interventions almost never lead to massive effects.

Large effects usually require large interventions.

Science and health journalism, and scientific journals, are rife with promises of small fixes that cause huge results. Those findings almost never hold up in the long run. A big effect from a tiny intervention is not impossible, but when you see one claimed, start with skepticism. The scientific graveyard is littered with them.

Hone Your Science-News Spider-Sense

Having my science spider-sense triggered on two counts — numbers didn’t seem likely; tiny intervention with huge effect — I went back over the paper in detail.

The text of the paper does indeed affirm the news coverage; the news articles (probably following from a press release) just rounded the numbers, but reported on the text of the paper accurately. The paper reads:

“...participants were faster in performing precision cutting (139.4 vs. 235.8, P = 0.0009) without compromising the accuracy.”

I was surprised. Initially, I guessed that the mistake was probably in a press release that the articles used, or in a single news article that others then relied upon. But that wasn’t it. The stat is in the actual scientific paper. Then I looked at the data table in the paper, and saw the problem.

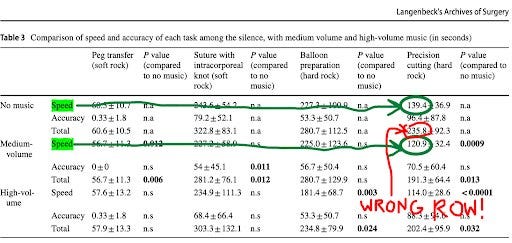

Here’s the table:

Notice that 139.4 is in the “speed” row; it’s the number of seconds that it took the participants to make precision cuts with no music playing. But 235.8 is in the “total” row; that number is the sum of the speed score *plus* an accuracy score that is right above it.

So the text of the article does not agree with the data table in the very same article. The text numbers — which appeared all over the news — are doing an apples-to-oranges comparison. It looks like the improvement that seemed, to me, too good to be true was, in fact, not true.

The real speed improvement listed in the study with medium-volume AC/DC was from 139.4 seconds to 120.9 seconds. That’s much more believable, and probably wouldn’t have triggered the ole spider senses. (Again, I still don’t believe the speed improvement was caused by the music; for more on that, see this post.)
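To see how far apart the two comparisons are, here is a rough Python sketch using only the three numbers quoted in this post. The constant and function names are my own labels, not anything from the paper, and the 9-minute mile is just the running analogy from earlier.

```python
# Numbers quoted in this post; the names are illustrative labels only.
NO_MUSIC_SPEED = 139.4     # seconds, "speed" row, no music
NO_MUSIC_TOTAL = 235.8     # "total" row: speed plus the accuracy score
MEDIUM_ACDC_SPEED = 120.9  # seconds, "speed" row, medium-volume AC/DC


def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100


def scaled_mile(before: float, after: float, mile_seconds: int = 540) -> str:
    """Apply the same proportional change to a 9-minute (540 s) mile."""
    minutes, secs = divmod(round(mile_seconds * after / before), 60)
    return f"{minutes}:{secs:02d}"


# Apples-to-oranges: the paper's text compared a total score to a speed.
reported = percent_reduction(NO_MUSIC_TOTAL, NO_MUSIC_SPEED)
# Apples-to-apples: speed without music vs. speed with music.
actual = percent_reduction(NO_MUSIC_SPEED, MEDIUM_ACDC_SPEED)

print(f"As reported: {reported:.1f}% faster, "
      f"like a 9:00 mile dropping to {scaled_mile(NO_MUSIC_TOTAL, NO_MUSIC_SPEED)}")
# As reported: 40.9% faster, like a 9:00 mile dropping to 5:19
print(f"From the table: {actual:.1f}% faster, "
      f"like a 9:00 mile dropping to {scaled_mile(NO_MUSIC_SPEED, MEDIUM_ACDC_SPEED)}")
# From the table: 13.3% faster, like a 9:00 mile dropping to 7:48
```

A 13 percent improvement is the sort of number that passes the smell test; a 41 percent improvement is not.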

What worries me here is that the numbers, which were trumpeted in a bunch of news articles, seemed implausible to me, someone who knows nothing whatsoever about research on the influence of music on surgical skills. We all make mistakes constantly. But the fact that this particular mistake in the text (and presumably the press release) didn’t stop the paper’s authors, or their peer reviewers, in their tracks is not encouraging.

So I wrote to one of the study authors to suggest that a mistake had probably been made. The author kindly replied, confirmed the mistake, and told me that the journal has been informed of the need for a correction. I really appreciate that. But, unfortunately, the correction obviously won’t make headlines.

We can’t improve the news we read, but we can improve how we respond to it:

1) stop to think about numbers in the news (and practice Fermi estimation; it’s tough, but you’ll improve!), and

2) be skeptical of small interventions that promise big effects.

Thank you for reading — and thanks to everyone who commented on the last post.

If you think this post might help improve science-news literacy, please share it. (Tag me on Twitter or Instagram so I can thank you.)


Until next time…

David

P.S. In response to last week’s post, reader and anesthesiologist Ali MacDonald shared this Wired article in the comments. (Ali is featured in the article.) It notes that music can be helpful for surgeons, but that it is often played too loudly, which can hamper communication or cause a surgical team to miss important alarms.

Hello David. I'm thoroughly enjoying reading the back-catalogue of this blog, having only recently discovered it (I read Range last year — and as a generalist myself, loved it for the confirmation bias *and* the content 😌 ). I wonder if you're able to update links? The references to Fermi estimation, for example, go to a now dead link at bulletin.com. Do you have the same articles here, perhaps, that you could update the link with?