Fact-Checking and the Illusion of Explanatory Depth

I don't know how I know, I just know... or do I?

This post was going to be a short note saying that I might not post for a bit. Instead, I figured it would be more interesting to write about what, precisely, has me so busy: fact-checking.

My new book is in finished-enough form that I hired someone to torture me on a daily basis by combing the manuscript for errors. So far the necessary changes have been numerous but blessedly small. Still, they take up an enormous amount of time. For example:

At the start of one chapter, I tell the story of an event promoter. In giving a very brief (four sentences) chronology of her early life, I describe a relevant event at age fifteen (getting into a music club), then another at sixteen (going to a particular performance at a music festival), and a second that same year (getting invited to be a promoter). My descriptions came from interviews with the promoter. Enter Emily Krieger, fact-checker extraordinaire.

Emily proved to me that the promoter could not have been sixteen during one of the events that I wrote as occurring when she was that age. She must have been — drum roll — seventeen. The promoter had slightly misremembered the timing of a performance that occurred a mere half-century ago. It happens! No big deal — except it messes up my chronology. I can’t have the brief bio read: “X happened when she was fifteen. At seventeen, Y happened. And then at sixteen, Z.”

I won’t bore you with the details (yet), but I also can’t just swap the sentences because of the way the passage leads into a larger story. So I will come up with some other solution. It’s a minor fix, but there are dozens of instances like this. Each one takes time to digest, verify, and resolve.

Dates are straightforward; broad claims are another beast entirely. Beyond clear facts, Emily is performing a slightly more nebulous but particularly crucial audit of my blind spots. Throughout the manuscript, there are plenty of spots where I give some blanket statement drawn from my general knowledge or experience. For example, at one point in the book, I write that the notion that creativity and originality are synonymous did not emerge until the late eighteenth century.

When I write a broad statement like that, I’m positive it’s true. And then Emily comes upon it and checks my citations to see how I back it up. If there’s no citation, and she isn’t convinced, she’ll leave a note that amounts to “How do you know?” Gulp. I just know! I read it someplace! Many places! After five minutes of protesting in my own mind, I get to work figuring out if I actually have credible sources.

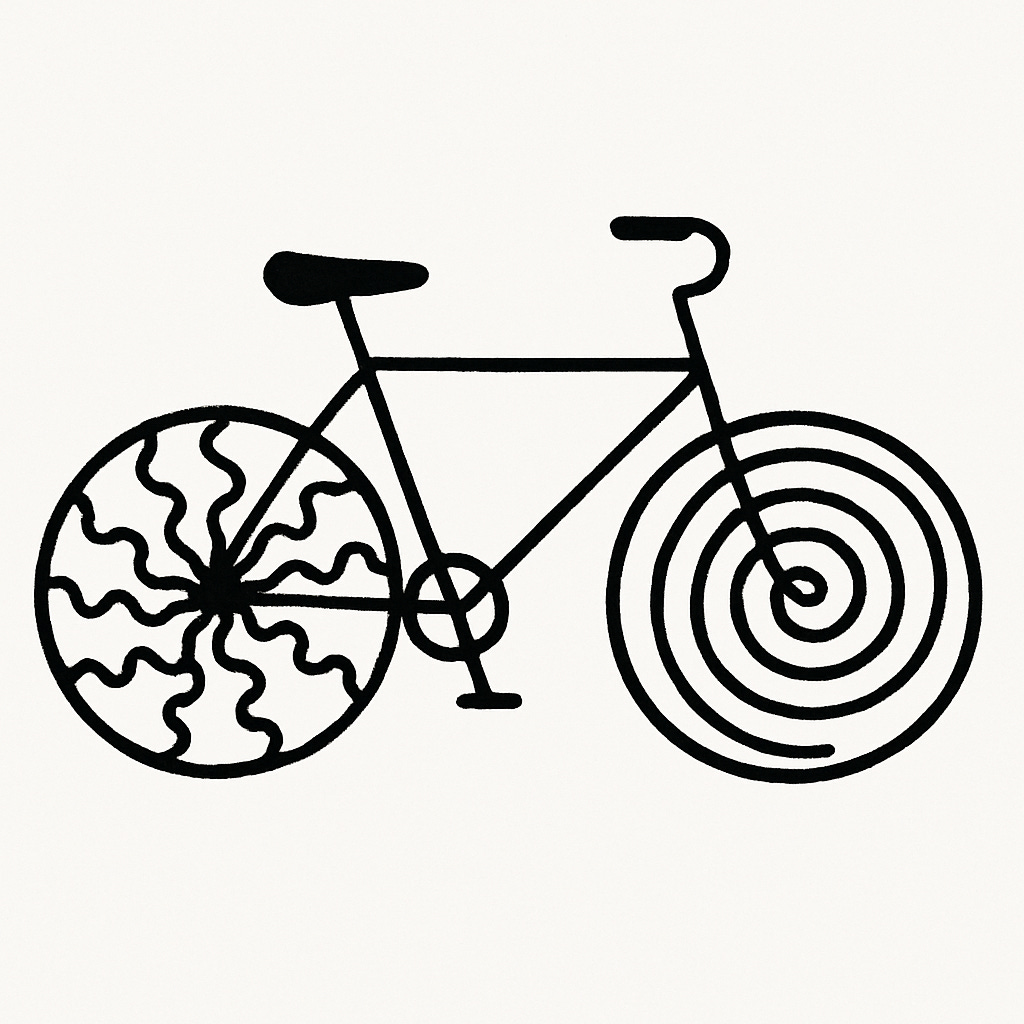

What Emily is doing is helping me avoid what psychologists know as “the illusion of explanatory depth” — a cognitive bias that describes our tendency to believe we understand something better than we really do because we gloss over the details. At least until they hit us in the face. A famous example: Do you know how a bicycle works? Of course you do. Okay, draw a bicycle. Most people find that they can’t do it accurately.

The illusion of explanatory depth typically refers to how we understand the workings of everyday mechanisms. But I think the general concept applies much more broadly. Until we’re asked to explain how we know what we know, we’re bound to make some unfounded assumptions.

This is a particular challenge for big-picture thinkers: if they’re mired in every detail, they can’t do their strategic work. In my own book-writing process, I try to strike a balance, with the help of processes and other people. Early on, I spend a lot of time thinking about the overall structure and scope of the book, and I talk through big ideas with my editor, Courtney Young. But compiling citations as I go keeps me attentive to important details. By the time I get to fact-checking, the strategic thinking is all behind me, and it’s time to get mired in details. I feel free to think big-picture while I’m writing in part because I know the fact-checking process will help mitigate the illusion of explanatory depth later on.

As I’ve gotten more experienced, I think I have fewer blind spots. But I would say that, wouldn’t I? At least now I’m aware that I will always have blind spots. Being aware of cognitive biases, sadly, does not cure you of them. Fortunately, systems and other people can help.

Thanks for reading. If you have systems (or people) for balancing your big- and little-picture thinking, I’d love to hear about them in the comments below. I’m curious how this manifests in other domains.

If you enjoyed this post, share it — or send it as a passive-aggressive note to that friend who drops grand pronouncements without sources.

Until next time…

David

I appreciate your sharing the "illusion of explanatory depth" bias. I experience a version of this in a large gen ed course I teach focused on social media. I ask students to draw the internet and we look at the drawings to see the range of ways in which we understand or visualize the internet. We agree that "the internet" is something most of us act like we know; we nod our heads and say the internet, or wireless, or the cloud without really understanding these concepts/technologies in depth. So, I appreciate your discussion of this bias in light of fact-checking!

It's so hard, and yet can be critical, to know where our blind spots are! In coaching leaders/preparing candidates for interviews I've started asking "What is a piece of feedback you've received that you disagreed with?" It prompts reflection on the disconnect between self-perception and others' perceptions of us, which is more likely to become a real problem in the future than our known weaknesses. If we can identify the areas where we are most prone to over-estimate our knowledge or abilities, then we can engage external support (like your fact-checker) or at least force ourselves to slow down and question our assumptions.